If the earlier parts of this series explained how to build AI voice agents, this one explains why most of them fail.

Not because the models aren’t good enough.

Not because voice is “too hard.”

But because teams underestimate two things:

- Guardrails

- Business knowledge

Get either wrong, and the agent becomes a liability.

Get both right, and it becomes infrastructure.

Why Voice Agents Need Stronger Guardrails Than Chatbots

Text chatbots can get away with pauses, edits, and rewrites.

Voice cannot.

Every mistake is heard in real time.

Every hesitation feels awkward.

Every hallucination sounds confident.

That means guardrails in voice systems aren’t optional safety features. They are part of the interaction loop itself.

Guardrails Are Not Just “Safety”, They’re Interaction Control

Most people think guardrails are about stopping bad answers.

That’s only half the story.

In voice systems, guardrails also control when the agent speaks, when it stops, and when it exits the conversation entirely.

1. Interruption & Barge-In Sensitivity

The most important real-time guardrail is knowing when to shut up.

Technically, this means:

- Detecting a TurnStartedEvent

- Immediately stopping Text-to-Speech (TTS)

- Flushing audio buffers

- Returning to a listening state

But tuning matters:

- Too sensitive → background noise cuts the agent off

- Not sensitive enough → agent talks over the user
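The steps above can be sketched as a small state machine. This is a minimal illustration, not any platform's API: the `TurnStartedEvent` fields, the `VoiceAgent` class, and the `min_speech_ms` threshold are all assumptions made for the example. The threshold is the tuning knob: raise it and noise is ignored more often, lower it and the agent yields faster.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class AgentState(Enum):
    LISTENING = auto()
    SPEAKING = auto()


@dataclass
class TurnStartedEvent:
    # Hypothetical event shape: how long the detected user speech has lasted.
    speech_ms: int


@dataclass
class VoiceAgent:
    # Assumed tuning knob: ignore speech shorter than this (coughs, door slams).
    min_speech_ms: int = 200
    state: AgentState = AgentState.LISTENING
    audio_buffer: list = field(default_factory=list)

    def speak(self, chunks):
        """Queue synthesized audio chunks and enter the speaking state."""
        self.audio_buffer.extend(chunks)
        self.state = AgentState.SPEAKING

    def on_turn_started(self, event: TurnStartedEvent) -> bool:
        """Handle a possible barge-in. Returns True if the agent yielded."""
        if self.state is not AgentState.SPEAKING:
            return False
        if event.speech_ms < self.min_speech_ms:
            return False  # too short: treated as background noise
        self.audio_buffer.clear()          # flush queued audio (stands in for stopping TTS)
        self.state = AgentState.LISTENING  # return to the listening state
        return True
```

A 50 ms blip leaves the agent speaking. A 350 ms utterance clears the buffer and hands the turn back to the user.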

2. Abuse, Resource Protection, and Cost Control

Voice agents are vulnerable in ways chatbots aren’t.

A malicious (or bored) user can:

- Keep the agent talking endlessly

- Ask irrelevant questions

- Drain inference credits deliberately

Real guardrails include:

- Maximum call duration

- Irrelevant-question counters

- Automatic hang-up logic

- Graceful refusal responses

This isn’t about user experience. It’s about protecting infrastructure.
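A rough sketch of how these limits might fit together. The `CallGuard` class, its default limits, and the three return values are hypothetical. The `on_topic` flag is assumed to come from some upstream relevance classifier that is not shown here.

```python
from dataclasses import dataclass


@dataclass
class CallGuard:
    max_call_seconds: float = 600.0  # assumed limit, tune per deployment
    max_irrelevant: int = 3          # assumed limit, tune per deployment
    irrelevant_count: int = 0

    def check(self, elapsed_seconds: float, on_topic: bool) -> str:
        """Return 'continue', 'refuse', or 'hang_up' for the current turn."""
        if elapsed_seconds >= self.max_call_seconds:
            return "hang_up"          # maximum call duration reached
        if on_topic:
            return "continue"
        self.irrelevant_count += 1    # irrelevant-question counter
        if self.irrelevant_count >= self.max_irrelevant:
            return "hang_up"          # automatic hang-up logic
        return "refuse"               # graceful refusal, call stays open
```

The check runs once per user turn, before any LLM call, so an off-topic turn costs nothing in inference.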

3. Hallucination Control and “I Don’t Know” Enforcement

One of the hardest guardrails to implement is forcing the agent to admit ignorance.

Without it, voice agents will:

- Invent policies

- Guess personal details

- Confidently fabricate answers

If the answer isn’t in the business knowledge:

“I don’t have access to that information.”

That response must be forced, not optional.

Some platforms achieve this by breaking conversations into blocks or checklists, limiting how much the LLM can improvise at any step.
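One way to make that response forced rather than optional is to decide it in code, before the model is ever called. The sketch below assumes retrieval returns `(chunk_text, score)` pairs. The `answer` function, the `generate` callable, and the `min_score` cutoff are placeholders for illustration, not a specific platform's interface.

```python
FALLBACK = "I don’t have access to that information."


def answer(question, retrieved, generate, min_score=0.75):
    """Answer only from grounded chunks; otherwise return the fixed fallback.

    retrieved: list of (chunk_text, similarity_score) pairs.
    generate:  callable(question, chunks) -> str, standing in for the LLM call.
    min_score: assumed relevance cutoff; the right value depends on the retriever.
    """
    grounded = [text for text, score in retrieved if score >= min_score]
    if not grounded:
        return FALLBACK  # the model is never called, so it cannot improvise
    return generate(question, grounded)
```

The design choice is that refusal lives outside the prompt. A prompt can ask the model to admit ignorance. This branch guarantees it whenever retrieval comes back empty or weak.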

4. Privacy, Compliance, and Enterprise Reality

Once voice agents enter real businesses, compliance shows up fast.

Guardrails must account for:

- PII redaction in call logs

- Masking names, phone numbers, payment details

- Role-based access control (RBAC)

- Encryption at rest

- SOC 2 / GDPR expectations

None of this is “AI magic.”

It’s systems engineering.
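As a small taste of that engineering, here is a minimal redaction pass for call transcripts. The two patterns are deliberately simple assumptions (US-style phone numbers, 13 to 16 digit card numbers). Masking names needs entity recognition and is not covered here.

```python
import re

# Assumed, simplified patterns; real deployments need locale-aware rules.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")
PHONE_RE = re.compile(r"(?<!\d)\(?\d{3}\)?[ .-]?\d{3}[ .-]?\d{4}(?!\d)")


def redact(transcript: str) -> str:
    """Mask card and phone numbers before the transcript is written to logs."""
    # Cards first, so a long digit run is not partially matched as a phone number.
    transcript = CARD_RE.sub("[CARD]", transcript)
    return PHONE_RE.sub("[PHONE]", transcript)


print(redact("My number is 555-123-4567 and my card is 4111 1111 1111 1111."))
# prints: My number is [PHONE] and my card is [CARD].
```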

Business Knowledge: What Makes the Agent Useful (or Dangerous)

A voice agent without business knowledge is just a conversational toy.

A voice agent with uncontrolled knowledge is worse.

This is why Retrieval-Augmented Generation (RAG) sits at the center of every serious deployment.

Knowledge Is Retrieved, Not Trained

Modern voice agents do not retrain models every time data changes.

Instead:

- Business documents are embedded into vectors

- A similarity search retrieves relevant chunks

- Only those chunks are passed to the LLM

This allows:

- Immediate updates

- Scoped answers

- No retraining cycles
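The retrieve-then-prompt flow can be shown with a toy example. The `embed` function below is a bag-of-words stand-in for a real embedding model, and the sorted list stands in for a vector database. Both are assumptions chosen so the sketch runs with no dependencies.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=2):
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query, chunks):
    """Pass only the retrieved chunks to the LLM, nothing else."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "We are open 9am to 5pm on weekdays.",
    "Emergency service is available 24/7.",
    "Refunds are processed within 10 days.",
]
top = retrieve("Is emergency service available?", docs, k=1)
prompt = build_prompt("Is emergency service available?", top)
```

Updating the business data means editing `docs`. The model itself never changes.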

The retrieval itself must be fast, because total response time still has to stay under the ~800ms conversational threshold. Optimized systems can perform vector search in single-digit milliseconds.
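To see why retrieval speed matters, it helps to write the budget down. The per-stage numbers below are illustrative placeholders, not measurements. Only the ~800ms ceiling comes from the text.

```python
BUDGET_MS = 800  # the ~800ms conversational threshold


def remaining_budget(stage_ms, budget_ms=BUDGET_MS):
    """Milliseconds left after all pipeline stages; negative means over budget."""
    return budget_ms - sum(stage_ms.values())


# Hypothetical per-stage latencies, for illustration only.
stages = {
    "speech_to_text": 150,
    "vector_search": 8,
    "llm_first_token": 350,
    "tts_first_audio": 200,
}
headroom = remaining_budget(stages)  # 800 - 708 = 92
```

With these placeholder figures, a retrieval step that took 150ms instead of 8ms would push the total past the threshold on its own.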

Source Quality Directly Affects Voice Quality

This is a subtle but critical point.

If your knowledge base is built from:

- Poor OCR

- Messy PDFs

- Bad transcripts

then those errors flow through retrieval and into what the agent says out loud.

Teams building production voice agents invest heavily in:

- Clean source documents

- Domain-specific speech recognition

- Structured SOPs instead of raw text dumps

Semantic Caching: Consistency and Speed

Voice agents hear the same questions repeatedly:

- “What are your hours?”

- “Do you offer emergency service?”

- “How much does this cost?”

Semantic caching solves two problems at once:

- Consistency: the answer has already been vetted.

- Latency: the system bypasses both the LLM and TTS layers and plays a pre-generated response.

This can shave hundreds of milliseconds off common interactions and dramatically improves perceived intelligence.
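A minimal sketch of the lookup. Word-set overlap (Jaccard) stands in here for real embedding similarity, and the `SemanticCache` class, the `threshold` value, and the audio paths are all hypothetical.

```python
import re


def tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def similarity(a, b):
    """Jaccard overlap of word sets; a toy stand-in for embedding similarity."""
    ta, tb = tokens(a), tokens(b)
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0


class SemanticCache:
    def __init__(self, threshold=0.6):
        self.threshold = threshold  # assumed cutoff; tune against real traffic
        self.entries = []           # (vetted question, pre-generated audio path)

    def put(self, question, audio_path):
        self.entries.append((question, audio_path))

    def get(self, question):
        """Return pre-generated audio for a close-enough question, else None."""
        best = max(self.entries, key=lambda e: similarity(question, e[0]), default=None)
        if best and similarity(question, best[0]) >= self.threshold:
            return best[1]
        return None


cache = SemanticCache()
cache.put("What are your hours?", "audio/hours.wav")
```

On a hit, the caller hears the stored audio and neither the LLM nor TTS runs. On a miss, `get` returns `None` and the request falls through to the normal pipeline.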

Business Knowledge Must Be Actionable

Static answers aren’t enough.

Production agents connect to:

- CRMs (Salesforce, HubSpot)

- Calendars

- Inventory systems

- Ticketing platforms

If the system switches models mid-conversation (for speed vs reasoning), the session context must persist. Otherwise, the user is forced to repeat themselves, and trust is lost instantly.
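One way to keep context across a model switch is to hold it in a session object that belongs to the call, not to either model. Everything named here (`Session`, `respond`, the `fast` and `reasoning` keys, the model call signature) is an assumption for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    history: list = field(default_factory=list)  # full turn log, shared by all models
    slots: dict = field(default_factory=dict)    # facts already collected, e.g. an order id


def respond(session, user_text, models, needs_reasoning):
    """Route the turn to a fast or a reasoning model; both see the same session."""
    model = models["reasoning"] if needs_reasoning else models["fast"]
    session.history.append(("user", user_text))
    reply = model(session.history, session.slots)
    session.history.append(("agent", reply))
    return reply


# Stand-in models: plain functions that show what context they received.
def fast(history, slots):
    return f"fast:{len(history)}"


def slow(history, slots):
    return f"slow:{len(history)}:{slots.get('order_id')}"


models = {"fast": fast, "reasoning": slow}
```

Because the history and slots travel with the session, the reasoning model sees the order id the caller gave earlier and never has to ask for it again.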

Persona Is a Guardrail Too

The system prompt defines the business voice.

This is not branding fluff. It’s operational control.

Good prompts specify:

- Tone (concise, friendly, formal)

- Forbidden behaviors

- Speech constraints (no markdown, no emojis, TTS-safe phrasing)

- When to escalate or end a call
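These four items can live in configuration rather than in a hand-edited prompt string. The sketch below is one possible shape: the `PERSONA` values are invented examples. The `tts_safe` filter is a second line of defense that strips markdown and emojis even if the model ignores the prompt.

```python
import re

# Hypothetical persona config; every value here is an example, not a recommendation.
PERSONA = {
    "tone": "concise, friendly",
    "forbidden": ["quoting prices not in the knowledge base", "giving legal advice"],
    "speech": ["no markdown", "no emojis", "spell out abbreviations"],
    "escalate_when": ["caller asks for a human", "caller is upset"],
}


def build_system_prompt(persona):
    """Render the persona config into a plain-text system prompt."""
    return "\n".join([
        f"Tone: {persona['tone']}.",
        "Never: " + "; ".join(persona["forbidden"]) + ".",
        "Speech rules: " + "; ".join(persona["speech"]) + ".",
        "Escalate or end the call when: " + "; ".join(persona["escalate_when"]) + ".",
    ])


MARKDOWN_RE = re.compile(r"[*_`#>]+")
EMOJI_RE = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")


def tts_safe(text):
    """Strip markdown symbols and common emoji ranges before text reaches TTS."""
    text = MARKDOWN_RE.sub("", text)
    text = EMOJI_RE.sub("", text)
    return re.sub(r"\s+", " ", text).strip()


prompt = build_system_prompt(PERSONA)
```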

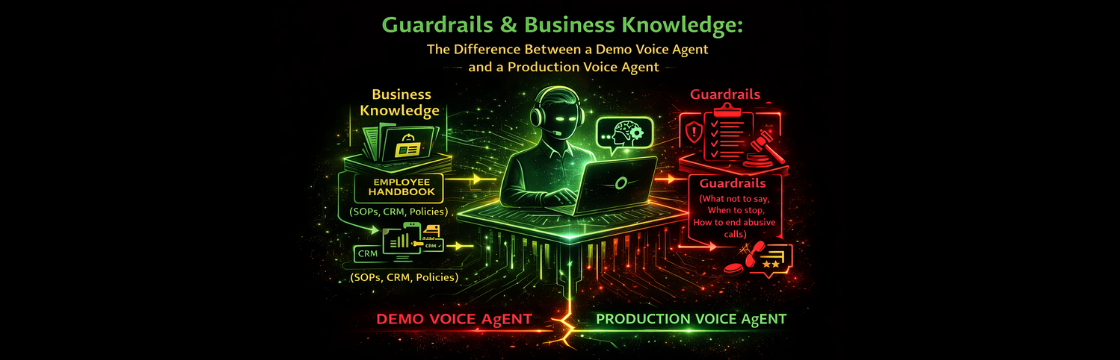

The Analogy That Actually Works

Think of your AI voice agent as a new customer service employee.

- Business Knowledge is the employee handbook and computer terminal (policies, SOPs, CRM access)

- Guardrails are the code of conduct and HR rules (what not to say, when to stop, when to hang up)

Final Takeaway: This Is Where Voice Agents Become Real

At this point in the series, the pattern should be clear.

Voice agents fail when teams focus on:

- Models

- Voices

- Demos

They succeed when teams focus on:

- Constraints

- Knowledge boundaries

- Latency budgets

- Operational safeguards

Guardrails + business knowledge are not features.

They are the difference between experimentation and production.

If you get this layer right, everything else compounds.

And if you don’t, no model upgrade will save you.